Old Prototypes

This page contains many old prototypes from back in my early hobbyist years of programming, before I started working as a game dev. These projects aren't a good indicator of my current skills, but I look back fondly on these smaller projects.

A Recreation of Ratchet And Clank

The game in the above video is available to download and play here!

Windows x64 Download

You will need a controller such as a ps4 dualshock or xbox controller to play. The controls are listed in game by clicking the "controls" button with your mouse.

As a big fan of Ratchet and Clank, I decided to try recreating my own version of the game with 3D models I found online. I then went through the process of making needed adjustments

on the models in blender, rigging them for animation with Mixamo, fine tuning the animations with an asset called Umotion, and setting up the animations to play with Unity's Animator Controller.

As more of a programmer than an artist, this project was really challenging as I attempted to branch out to more artistic concepts such as animation and UI design. I enjoyed the new challenges, and had fun

drawing the UI for this game with the pen tool in photoshop. The healthbars and weapon UI in the top left corner was all hand drawn by myself, and overall I'm pretty pleased with my programmer art! In the future I

would try to pick a consistent color palette from the start as I feel some of the reds and oranges and blues of all the combined UI may be too many different colors.

Reinforcement Learning

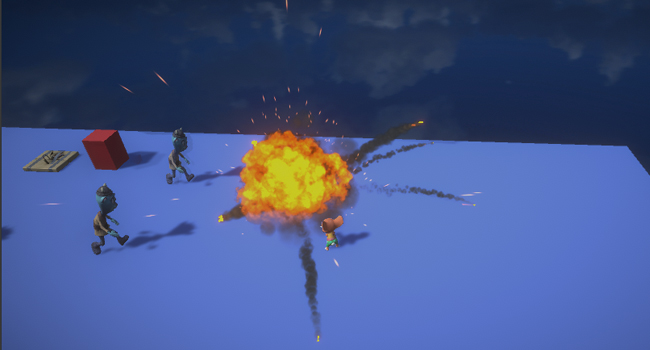

The mouse character was trained via reinforcement learning to create player inputs to avoid the blue enemies, face the blue enemies, shoot them with limited ammo, collect the red ammo packets, and also to shoot the claymore mines on the ground to kill the blue enemies with aoe damage.

This project makes use of Unity's Machine Learning framework, which is made up of a combination of tensorflow, Unity's ML Agent package, and a python api which communicates between the two.

There are three basic components to the Unity facing aspect of this framework:

1. Observations - the information the reinforcement model perceives about its environement. These observations may be values such as locations, velocities, and rotations. They can also be more abstract such as

the RGB color values of each pixel of a picture, or even bools or enumerations.

The number of these observations decides the number of input neurons for the neural network of the model.

2.Actions - all the given actions the model can take and correlates to the output neurons of the neural network. For example, the outputs

for my final model are for movement, rotation, and firing the gun.

3. Reward Signals - The model tries to maximize it's reward and you can therefore shape its behavior by adding a reward for desired behavior and

subtract rewards for undesired behavior.

Creating a decent Reward structure for my model was one of the more challenging aspects of this project. It seems that best practice is to normalize rewards on a scale of -1 to 1. There are many interesting tactics with rewards to help shape the behavior of reinforcement learning models. For example, if you add a very small negative reward over time, such as -0.02 per second, the model is incentivized to speed up its actions so that reward is not diminished over time as much. I found a good balence for this project was a reward of +0.25 for every succesfully shot enemy, +1 for collecting a red cube, -1 for letting an enemy touch you, -1 for shooting the gun and missing, and about -.001 per frame that the model is running to help speed him up.

It was extremely interesting to see how rewards shape the behavior of reinforcement learning models. It can also be quite frustrating because the models are very good at optimizing their rewards in ways that

do not produce your desired outcome. I found that it is generally best practice to only reward end goals and not micro behaviors that you think will lead to completing a goal. For example, I only reward the agent for

successfully shooting an enemy, but I do not reward him for rotating to face towards an enemy. This micro behavior of rotating to face enemies is needed for the model to shoot the enemies, and I origionally tried rewarding

this behavior as I thought it would be needed, but it ended up leading to some wacky results where the model prioritized aiming towards enemies over anything else. So, rewarding completed goals over behaviors was a key takaway

from this project and seems to align with what I've seen online for structuring reward models.

Grid based RPG

Grid based combat with multi level terrain where height gives a damage advantage. When two players face eachother in neighboring cells, they lock eyes. If other players attack a character with locked eyes, they can sneak up on the side or back for extra damage as well.

An earlier version of the prototype, showing off how the grid based movement and UI supported uneven terrain.

One of my favorite games growing up on PS2 was a tactical RPG game called Gladius. I loved the grid based combat and enthusiatically played along in this game's world of gladiator schools and arenas. You had to use the 3D environements in the arenas to gain the tactical advantage, and the hundreds of different skills and weapon types made the turn based combat system look and feel great.

My goal for this project was to begin creating something similar to Gladius. I soon realized that creating a full 3-D RPG game is an

extraordinarily large task, and quickly had to reajust my goal to something more realistic.

I decided on creating just one playable battle with simplified characters and minimal UI.This was still a huge task for me at the time,

but at least it was attainable.

Key features included creating a grid based map on multilevel terrain, implementing A star pathfinding on the grid, UI that visualizes the movement,

turn based combat, and implementing animated characters.

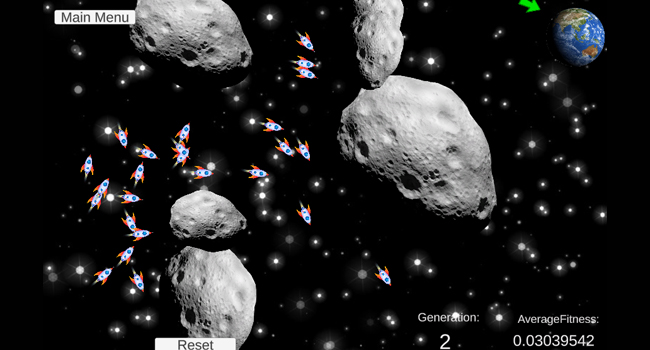

Genetic Algorithms

A single generation of spaceships using their given genes to traverse the map.

After binge watching all of the "Nature of Code" videos by Daniel Shiffman, I became very interested in recreating natural movements and systems with code. This project is a product of this interest in AI and how genetic algorithms allow for synthetic organisms to "learn" a set behavior. They do so by randomly generate a population of DNA objects which hold a randomly generated list of Genes in each DNA. These DNA objects then have their "Fitness" tested and compared amongst each other. This population of DNA then goes through a "Crossover" phase where they mate and pass on their Genes to create new DNA children with a combination of both parent's genes. In this crossover phase, the DNA with the highest fitness have a greater chance of mating so that the next generation is hopefully more fit than the previous. Once the children have their fitness tested this cycle repeates until the population converges on a set DNA pattern of genes as genetic diversity slowly dwindles. To combat this this loss of genetic diversity, a "Mutation Rate" is applied which allows each child born to have the possibility of randomly having a new different gene from its parents.

Basically, a Genetic algorithm:

generates a Random population -> population is tested for fitness -> most fit of population breed -> children are born and have their fitness tested ->

children breed, and the cycle continues.

In my Smart Rockets project one Gene in the DNA of a smart rocket is a 3 dimensional vector. The x and y axis values of this 3d vector are the direction that the 2 dimensional smart rocket will fly in. The z axis value of the gene scales the rocket's speed when traveling in that direction. So, this means each smart rocket interprets each 3d vector gene as a 2d vector direction to fly in at a set speed dictated by the z value of the gene. A smart rocket could then have DNA with 10 genes, and therefor fly in these directions at the speed dictated by each gene one after another. The DNA of these smart rockets is essentialy just a roadmap of directions to fly in at specific speeds. I chose to have each gene active for 0.25 seconds of flight arbitrarily, and this is a set value for every gene. So a DNA with 10 3-d vectors equals 2.5 seconds of total flight time in 10 different directions at 10 different speeds.

Fitness is calculated as the inverse of the distance from the target when the rocket collides with something. So, fitness aproaches zero when it's far from the target, and fitness equals 1 when it hits the target. I also weighted the x-axis distance to be more important than the y-axis distance from the target so that rockets have more fitness if they are able to find a way around an obstacle and go further to the right on the screen. Additionally, fitness is calculated at an exponential scale, so that rockets that make it a little bit closer to the target actually have a much higher fitness and are more likely to breed.